Virtualization and Xen

As 2015 draws to a close, and given the overall absence of articles on my blog this year, I thought I’d add one more piece of writing so that I don’t have to call this year a complete failure.

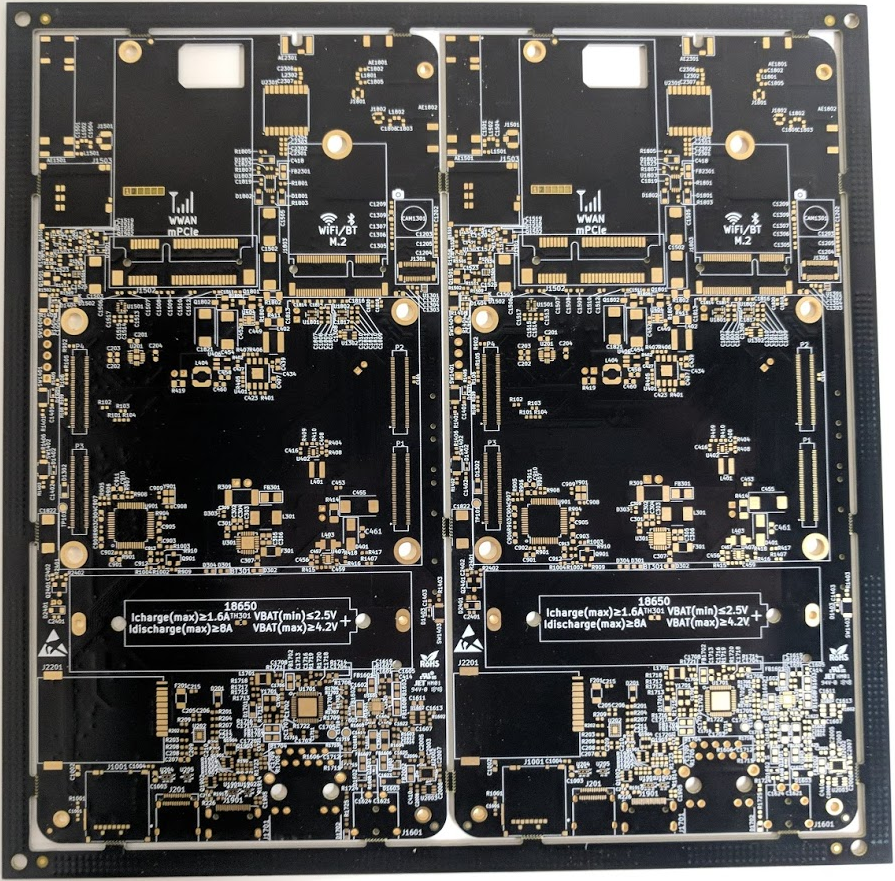

This is my attempt to explain virtualization concepts and Xen in simple terms, including a glimpse into manual Xen hypervisor and guest installation on Debian 8. I’ll also briefly mention cloud computing and containers, since these are related concepts and more recent developments. Have some coffee, this will be a long one.

Virtualization Concepts and Theory

Terminology

The following are key terms for this topic.

- Virtualization: Technology that enables virtual versions of resources or devices such as servers and operating systems, storage and network devices

- Server virtualization: Method of running multiple independent virtual operating systems on a single machine

- Host: Machine that runs the virtualization platform and the hypervisor

- Guest (VM): Virtual machine

- Hypervisor (Virtual Machine Monitor, VMM): Software that manages hardware resources and virtual machines

- Native or bare-metal hypervisor (type 1): Executes directly on Host’s hardware

- Hosted hypervisor (type 2): Executes inside a regular operating system

- Paravirtualization (PV): Paravirtualized Guests require a PV-enabled kernel and PV drivers, so the Guests are aware of the Hypervisor and can run without emulating hardware

- Full virtualization (HVM): Uses virtualization extensions from the Host’s CPU to virtualize Guests, using QEMU to emulate PC hardware including BIOS, IDE disk controller, VGA graphic adapter, USB controller, network adapter, etc

- Live migration: A technique for moving a running virtual machine to another physical host, without stopping it or the services running on it

- Cloud computing: Model of computing in which dynamic, scalable, and virtualized resources are provided as a service

- Container-based virtualization: Lightweight OS-level virtualization that runs multiple isolated systems under a single kernel on a single host, as an alternative to running full virtual machines

Server Virtualization

Virtualization is a very broad term with different definitions and perceptions, but in essence it deals with resource abstraction. We are interested in the most widespread understanding and use of virtualization: server virtualization.

Server virtualization is the easiest form to understand: it allows multiple virtual machines to share and run on the same physical hardware. This solves the problem of costly, underutilized servers sitting idle in data centers by consolidating multiple physical servers into a single server that runs multiple virtual machines, giving it a much higher utilization rate.

The Host machine runs the Hypervisor, which is the most privileged software layer responsible for managing hardware resources and virtual machines. It allows concurrent execution of Guest machines. There are two types of Hypervisors:

- Native: Executes directly on the CPU, and not as kernel- or user-level software. Hypervisor administration may be performed by a privileged Guest, which can create and launch new Guests. This Hypervisor includes its own CPU scheduler for Guest VMs. Examples: Citrix XenServer, KVM, VMware ESX/ESXi, Microsoft Hyper-V

- Hosted: Executed by the Host OS kernel and may be composed of kernel-level modules and user-level processes. The host OS has privileges to administer the Hypervisor and launch new Guests. This hypervisor is scheduled by the Host’s kernel scheduler. Examples: VirtualBox

Note that the above distinction is not always clear. For example, a common misconception is that KVM is a hosted Hypervisor since it runs as a kernel module inside Linux, but it actually does run directly on hardware, making it a native Hypervisor.

There are a number of things that can be either virtualized or paravirtualized when creating a VM, and these include:

- Disk and network devices

- Interrupts and timers

- Emulated platform (motherboard, device buses, BIOS, legacy boot)

- Privileged instructions and pagetables (memory access)

Moreover, there are several approaches to virtualizing Guest machines: PV, HVM, HVM with PV drivers, PVHVM, and PVH.

Full virtualization is the early implementation where the hardware of the virtual machine is almost completely emulated in software. There are two implementation models of full virtualization:

- Binary translation: Provides a complete virtual system composed of virtualized hardware components onto which an unmodified operating system can be installed. This adds overhead for the Guest OS whenever it tries to access hardware, since the commands must be translated from virtual to physical devices at run time

- Hardware-assisted virtualization: The same as above, except it uses CPU support (AMD-V or Intel VT-x) to execute virtual machines more efficiently. This makes it much simpler to virtualize the CPU, but other components still need to be virtualized (disk, network, motherboard, page tables, BIOS, etc). This is also known as Hardware Virtual Machine (HVM), and can be called hardware emulation. Since interfaces to provide disk and network access are complicated to emulate, we can also run Guests in fully virtualized mode with the addition of PV drivers for disk and network.

The Guest operating system does not have to be modified to run as a virtual machine in this mode.

In order to determine whether a CPU in a given system supports fully virtualized (HVM) guests, check the flags entry in the file /proc/cpuinfo:

$ grep flags /proc/cpuinfo

You will see vmx for Intel’s VT-x, or svm for AMD-V.
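The check above can be wrapped in a small helper. This is only a sketch: the function name `hvm_support` and its optional file argument are illustrative additions that make it possible to try the check against a saved copy of /proc/cpuinfo.

```shell
# Report which hardware virtualization flag the CPU advertises.
# The optional argument (a cpuinfo-style file) is an assumption added
# for illustration; by default the function reads /proc/cpuinfo.
hvm_support() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # Only look at "flags" lines and match the flag as a whole word.
    if grep -Eq '^flags.*[[:space:]]vmx([[:space:]]|$)' "$cpuinfo" 2>/dev/null; then
        echo "Intel VT-x (vmx)"
    elif grep -Eq '^flags.*[[:space:]]svm([[:space:]]|$)' "$cpuinfo" 2>/dev/null; then
        echo "AMD-V (svm)"
    else
        echo "no HVM flag found"
    fi
}

# Usage: hvm_support    (inspects /proc/cpuinfo on the current machine)
```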

Paravirtualization provides a virtual system that includes an interface for Guest operating systems to efficiently use Host resources, without needing full virtualization of all components – instructions in the Guest OS that must be virtualized are replaced with hypercalls to the Hypervisor. Paravirtualization may include the use of a paravirtual network device driver by the Guest for passing packets more efficiently to the physical network interfaces in the host. While performance is improved, this relies on Guest OS support for paravirtualization (which Windows has historically not provided).

The question that comes up frequently is: “What is faster, PV or HVM?” If you try to search for this on the web, several top search engine results might lead you to an incorrect answer. Let me first say that both HVM and PV provide their own performance benefits:

- HVM: A processor technology for accelerating CPU virtualization (privileged instructions, syscalls) and the MMU (page tables)

- PV: A software technology where the guest kernel can use an accelerated interface for virtualized components, including disks and network interfaces, rather than emulating hardware

While it is historically true that PV was more performant, virtualization hardware and software have evolved since then, introducing a hybrid mode that uses elements of hardware-assisted virtualization together with paravirtualization calls where those are more efficient. This mode delivers the best Guest machine performance today and is called PVHVM in Xen. In the future, PVH is expected to provide the best performance.

Some benefits of virtualization are:

- Increased resource utilization

- Reduced infrastructure costs

- Rapid provisioning

- Easier scalability and fault tolerance

- Security (through Hypervisor strong isolation)

- Live migration of a running virtual machine between different physical machines (V2V)

- Migration of a physical machine to virtual machine (P2V)

- Centralizing administration efforts

Some cons of virtualization are:

- Hardware emulation adds a performance penalty

- Host machine is a single point of failure

- Noisy neighbor effects (specifically with disk and network I/O)

Cloud Computing

Cloud computing is an approach to modelling IT services. It is primarily a model in which dynamic, scalable, and virtualized resources are provided as a metered service over the Internet.

Cloud computing allows on-demand and almost instantaneous provisioning, usage and release of resources, usually with a pay-per-use pricing model. One can easily add or remove resources. It’s based on converged infrastructure where arrays of servers, storage and network resources are integrated into a single optimized solution. The basic premise is efficient resource utilization in order to provide flexible capacity that enables dynamic and on-demand provisioning of resources, driven by business and application needs. This is mainly achieved by using virtualization.

There are three service models:

- Infrastructure-as-a-Service (IaaS): Provides fundamental resources such as VMs, storage and network load balancers, which are rented on demand from the Cloud provider’s large resource pool. Clients manage the OS, but not the underlying infrastructure

- Platform-as-a-Service (PaaS): Provides the solution stack, or set of tools such as an OS, development environment, database, application and web servers. Clients manage the hosting environment, but usually not the underlying OS, network or storage

- Software-as-a-Service (SaaS): Provides application software over the network. Cloud applications may have the advantage of elasticity (automatic scaling according to traffic). Clients don’t manage the underlying infrastructure nor the OS

Containers

Containers provide lightweight process virtualization on the operating system level, where each container instance is running under the same kernel which provides process isolation and resource management. It is kind of a limited form of virtualization which isolates Guests, but doesn’t virtualize hardware.

It provides end users with an abstraction that makes each container a self-contained unit of computation. Think of virtual environments that are as close as possible to a standard Linux distribution. Containers became very attractive for being lightweight, which allows for much higher density than traditional server virtualization. It’s a really good boost for multi-tenancy of applications. This allows for big cost savings, as providers can oversubscribe hardware more efficiently. Container images also boot up very quickly and have minimal CPU and memory overhead.

However, containers inherently have a weak security model. Given that container instances share the same kernel, running them in a multi-tenant environment is not recommended. It is suggested to add a second layer of security with virtual machine technology, but nonetheless container security is an evolving area.

Systems like Docker define standardized containers for software. Rather than distributing software as a package, one can distribute a container that includes the software and everything needed for it to run. Being self-contained, containers eliminate dependencies and conflicts. Rather than shipping a software package plus a list of other dependent packages and system requirements, all that is needed is the standardized container and a system that supports the standard. This greatly simplifies the creation, storage, delivery and distribution of software.

In Linux, containers rely on cgroups for resource management, and on namespaces to isolate an application’s view of the operating system environment.

In essence, containers are something like:

chroot (copy on write) + network isolation (:80 to :8000) + resource isolation (/var/myapp/log to /var/log)

Xen

Terminology

The following are key terms used in Xen.

- Paravirtualization

- Domain: A running instance of a virtual machine

- Dom0: Initial domain started by the Xen Hypervisor on boot. Dom0 has permissions to control all hardware on the system, and is used to manage the Hypervisor and the other domains. Runs as either PV or PVH Guest. Dom0 runs the Xen management toolstack. For hardware that is made available to other domains, like network interfaces and disks, it will run the backend driver, which multiplexes and forwards requests to hardware from the frontend driver in each DomU. Unless driver domains are being used or the hardware is passed through to the DomU, the Dom0 is responsible for running all of the device drivers for the hardware.

- DomU: Virtual machine. Unprivileged domain — a domain with no special hardware access. It must run a frontend driver for multiplexed hardware it shares with other domains.

- Frontend driver: To access devices that are to be shared between domains, like the disks and network interfaces, the DomUs must communicate with Dom0. This is done by using a two-part driver. The frontend driver must be written for the OS used in the DomU, and uses XenBus, XenStore, shared pages, and event notifications to communicate with the backend driver, which lives in Dom0 and fulfills requests. To the applications and the rest of the kernel, the frontend driver just looks like a normal network interface or disk

- Backend driver: To allow unprivileged DomUs to share hardware, Dom0 must give them an interface by which to make requests for access to the hardware. This is accomplished by using a backend driver. The backend driver runs in Dom0 or a driver domain and communicates with frontend drivers via XenBus, XenStore, and shared memory pages. It queues requests from DomUs and relays them to the real hardware driver.

- PV-DomU: Paravirtualized Guests require a PV-enabled kernel and PV drivers, so the Guests are aware of the Hypervisor and can run efficiently without virtually emulated hardware (Linux kernels have been PV-enabled from 2.6.24)

- HVM-DomU: Fully virtualized guests are usually slower than paravirtualized guests, because of the required emulation. Note that it is possible to use PV drivers for I/O to speed up HVM Guests.

- QEMU: Used to provide device emulation for HVM Guests

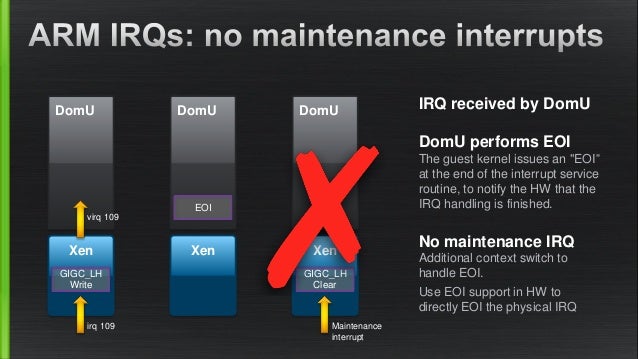

- PVHVM: To boost performance, fully virtualized HVM Guests can use special paravirtual device drivers (PVHVM drivers). These are PV drivers optimized for HVM environments; they completely bypass the QEMU emulation for disk and network I/O, giving PV-like (or better) performance on HVM systems. These drivers also paravirtualize interrupts and timers

- PVH: This is essentially a PV Guest using PV drivers for boot and I/O. Otherwise it uses hardware virtualization extensions, without the need for emulation. PVH has the potential to combine the best trade-offs of all virtualization modes, while simplifying the Xen architecture

- XAPI: A toolstack that exposes the XAPI interface for remote control and configuration of Guests (e.g. the xe tool)

- Xenstore: An information storage space shared between domains and maintained by the xenstored daemon, meant for configuration and status information

- Hypercall: A “system call” to the hypervisor. A software trap from a domain to the Hypervisor, just as a syscall is a software trap from an application to the kernel. Used for privileged operations (e.g. updating page tables)

- VIF: Virtual network interface in the guest

- VBD: Virtual block device in the guest

- Event channel: An event is the Xen equivalent of a hardware interrupt. Xen uses event channels to signal events (interrupts). Interdomain communication is implemented using a frontend and backend device model interacting via event channels

Xen Overview

Xen is a type-1 Hypervisor that provides server virtualization.

It is used by many infrastructure and hosting providers such as Amazon Web Services, Rackspace Cloud and Linode. The free and open-source version of Xen is developed by a global open-source community named Xen Project, under the Linux Foundation, and there are also different commercial distributions available such as Citrix XenServer and Oracle VM. The first public release of Xen was made in 2003.

Xen supports five different approaches to running the Guest operating system:

- PV (Paravirtualization): Guests run a modified operating system that uses a special hypercall ABI. Guests can run even on CPUs without support for virtualization features, and there is no need to emulate a full set of hardware. Paravirtualization support was integrated into the Linux kernel in version 2.6.23

- HVM (Hardware Virtual Machine): Hardware-assisted virtualization (Intel VT-x, AMD-V, ARM v7A and v8A) makes it possible to run unmodified guests such as Microsoft Windows

- HVM with PV drivers: HVM extensions often offer instructions to support direct calls by a PV driver into the hypervisor, providing performance benefits of paravirtualized I/O

- PVHVM: HVM with PVHVM drivers. PVHVM drivers are optimized PV drivers for HVM environments that bypass the emulation for disk and network I/O. They additionally make use of CPU functionality such as Intel EPT or AMD NPT support

- PVH: PV in an HVM “container”

Xen Project uses QEMU as its device model, that is, the software component that takes care of emulating devices (like the network card) for HVM Guests. The system emulates hardware via a patched QEMU “device manager” (qemu-dm) daemon running as a backend in Dom0. This means that the virtualized machines see an emulated version of a fairly basic PC. In a performance-critical environment, PV-on-HVM disk and network drivers are used during normal Guest operation, so that the emulated PC hardware is mostly used for booting.

Historically Xen contained a fork of QEMU with Xen support added, known as ‘qemu-xen-traditional’ in the xl toolstack. However since QEMU 1.0 support for Xen has been part of the mainline QEMU and can be used with Xen from 4.2 onwards. The xl toolstack describes this version as ‘qemu-xen’, and this became the default from Xen 4.3 onward.

Virtual devices under Xen are provided by a split device driver architecture. The illusion of the virtual device is provided by two co-operating drivers: the frontend, which runs in the unprivileged domain, and the backend, which runs in a domain with access to the real device hardware (often called a driver domain; in practice Dom0 usually fulfills this function).

The frontend driver appears to the unprivileged Guest as if it were a real device, for instance a block or network device. It receives I/O requests from its kernel as usual, however since it does not have access to the physical hardware of the system it must then issue requests to the backend driver. The backend driver is responsible for receiving these I/O requests, verifying that they are safe and then issuing them to the real device hardware. When the I/O completes the backend notifies the frontend that the data is ready for use; the frontend is then able to report I/O completion to its own kernel.

Frontend drivers are designed to be simple; most of the complexity is in the backend, which has responsibility for translating device addresses, verifying that requests are well-formed and do not violate isolation guarantees, etc.

Xen also supports virtual machine live migration between physical hosts, without loss of availability. During this procedure, it iteratively copies the memory of the virtual machine to the destination without stopping its execution.
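To get a feel for why the copy is iterative, here is a toy model of the idea, not Xen’s actual algorithm. It assumes that while one round of pages is being sent, the still-running guest dirties a fixed percentage of them, and that the final stop-and-copy happens once the remainder drops below a threshold. The function name, its parameters and the cap of 30 rounds are all made up for illustration.

```shell
# Toy model of iterative pre-copy migration (illustrative only).
# simulate_precopy TOTAL_PAGES DIRTY_PERCENT THRESHOLD
simulate_precopy() {
    remaining=$1; dirty_percent=$2; threshold=$3
    round=0
    # Keep copying while too many pages are still dirty; the cap of 30
    # rounds stops the loop if the guest dirties memory too quickly.
    while [ "$remaining" -gt "$threshold" ] && [ "$round" -lt 30 ]; do
        round=$((round + 1))
        # Pages dirtied during this round have to be sent again.
        remaining=$((remaining * dirty_percent / 100))
    done
    echo "rounds=$round final_pages=$remaining"
}

simulate_precopy 1000 20 10   # prints: rounds=3 final_pages=8
```

With a 20% dirty rate the amount left to copy shrinks quickly, so only a handful of pages remain for the final stop-and-copy step.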

Xen Architecture

The main components of Xen are:

- Hypervisor: Software layer that runs directly above the hardware, in the most privileged CPU mode. It is started by a bootloader (such as GNU GRUB) and then loads Dom0. It is responsible for CPU scheduling, memory management and interrupt handling

- Dom0: First operating system and a virtual machine that starts after the hypervisor boots. It has special management privileges and direct access to all physical hardware components. It contains all the drivers and a control stack to manage virtual machine creation, configuration and destruction. The administrator logs into the Dom0 to manage Guest virtual machines (DomUs).

- DomU: Unprivileged domains, guests or simply virtual machines. Can run paravirtualized (PV) or fully-virtualized (HVM), depending on hardware support in the CPU

- Frontend and backend drivers

- Toolstack: Dom0 control stack that allows a user to manage virtual machine creation, configuration and destruction. Exposes an interface that is either driven by a command line console, by a graphical interface or by a cloud orchestration stack such as OpenStack or CloudStack

- QEMU: Used to provide device emulation for HVM guests

Networking in Xen is defined by the backend and frontend device model. Backend is one half of a communication end point. Frontend is the device as presented to the Guest – other half of the communication end point. VIF is a virtual interface, and it is the name of the network backend device connected by an event channel to a network frontend on the Guest.

A Xen Guest typically has access to one or more paravirtualised (PV) network interfaces. These PV interfaces enable fast and efficient network communications for domains without the overhead of emulating a real network device. A paravirtualised network device consists of a pair of network devices. The first of these (the frontend) will reside in the guest domain while the second (the backend) will reside in the backend domain (typically Dom0). The frontend devices appear much like any other physical Ethernet NIC in the Guest domain. Typically under Linux it is bound to the xen-netfront driver and creates a device ethN. The backend device is typically named such that it contains both the guest domain ID and the index of the device. Under Linux such devices are by default named vifDOMID.DEVID.
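The vifDOMID.DEVID naming scheme is easy to work with from the shell. The two helpers below are hypothetical (they are not part of any Xen tool) and only illustrate how a backend name maps to a domain ID and a device index.

```shell
# Build the default Linux backend device name for a guest interface.
# vif_name DOMID DEVID
vif_name() {
    echo "vif$1.$2"
}

# Split a backend name back into its domain ID and device index.
vif_parse() {
    ids=${1#vif}    # strip the "vif" prefix, leaving "DOMID.DEVID"
    echo "domid=${ids%%.*} devid=${ids#*.}"
}

vif_name 5 0        # prints: vif5.0
vif_parse vif12.1   # prints: domid=12 devid=1
```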

The front and backend devices are linked by a virtual communication channel, while Guest networking is achieved by arranging for traffic to pass from the backend device onto the wider network, e.g. using bridging, routing or Network Address Translation (NAT).

Virtualised network interfaces in domains are given Ethernet MAC addresses. A MAC address must be unique among all network devices (both physical and virtual) on the same local network segment (e.g. on the LAN containing the Xen host).

The default (and most common) Xen configuration uses bridging within the backend domain (typically Dom0) to allow all domains to appear on the network as individual hosts. In this configuration a software bridge is created in the backend domain. The backend virtual network devices (vifDOMID.DEVID) are added to the bridge along with an (optional) physical Ethernet device to provide connectivity off the host. The common naming scheme when using bridged networking is to leave the physical device as eth0 (or bond0 if using bonded interfaces), while naming the bridge as xenbr0 (or br0). Have a look at the image here.

Note that the xl toolstack will never modify the network configuration and expects that the administrator will have configured the host networking appropriately.

A Xen Guest can be provided with block devices. These are provided as Xen VBDs. For HVM Guests they may also be provided as emulated IDE or SCSI disks. For each block device the abstract interface involves specifying:

- Nominal disk type: Xen virtual disk (aka xvd*, the default); SCSI (sd*); IDE (hd*)

- Disk number

- Partition number
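As a rough illustration of these three parts, the hypothetical helper below splits a device name such as xvdb3 into its type, disk and partition. It assumes the disk number is encoded as a letter (a for the first disk, b for the second, and so on) and only recognises the three prefixes listed above.

```shell
# Split a virtual device name such as "xvdb3" into type, disk and
# partition. parse_vdev is an illustrative helper, not a Xen command.
parse_vdev() {
    case $1 in
        xvd*) type=xvd ;;
        sd*)  type=sd ;;
        hd*)  type=hd ;;
        *)    echo "unknown device name: $1" >&2; return 1 ;;
    esac
    rest=${1#"$type"}                                # e.g. "b3"
    disk=$(printf '%s' "$rest" | sed 's/[0-9]*$//')  # disk letter(s): "b"
    part=${rest#"$disk"}                             # digits, or empty
    echo "type=$type disk=$disk partition=${part:-none}"
}

parse_vdev xvdb3   # prints: type=xvd disk=b partition=3
parse_vdev sda     # prints: type=sd disk=a partition=none
```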

Xen installation

The Xen Project Hypervisor is available as source distribution from XenProject.org. However, you can get recent binaries as packages from many Linux and Unix distributions. The software is released approximately once every 6-9 months, with several update releases per year containing security fixes and critical bug fixes. Each source release and the source tree contain a README file in the root directory, with detailed build instructions for the Hypervisor.

Most Linux distributions contain built binaries of the Xen Project Hypervisor that can be downloaded and installed through the native package management system. If your Linux distribution includes the Hypervisor and a Xen Project-enabled kernel, it is recommended to use them as you will benefit from ease of install, good integration with the distribution, support from the distribution, provision of security updates etc. Installing the Hypervisor in a distribution typically requires the following basic steps:

- Install your distribution

- Install Xen Project package(s) or meta-package

- Check boot settings

- Reboot

After the reboot, your system will run your Linux distribution as a Dom0 on top of the Hypervisor.

Before installing a Host (Dom0) OS, it is useful to consider where you intend to store the Guest OS disk images. This is especially important if you intend to store the Guest disk images locally. There are two main choices for local storage of Guest disk images, plus the option of remote storage:

- Use LVM to carve up your physical disk into multiple block devices, each of which can be used as a Guest disk

- Store Guest disk images as files (using either raw, qcow2 or vhd format) on a local filesystem

- Store Guest disk images remotely (NFS, iSCSI)

Using a block device based storage configuration, such as LVM, allows you to take advantage of the blkback driver which performs better than the drivers which support disk image storage (e.g. blktap or qdisk). Disk image based solutions typically offer slower performance but are more flexible in some areas (e.g. snapshotting). It is possible to mix and match the two approaches, for example by setting up the system using LVM and creating a single large logical volume with a file system to contain disk images.

Example of Debian 8 Xen installation

Install the Xen Hypervisor and Xen-aware kernel:

$ apt-get install xen-linux-system

Change the priority of Grub’s Xen configuration script (20_linux_xen) to be higher than the standard Linux config (10_linux):

$ dpkg-divert --divert /etc/grub.d/08_linux_xen --rename /etc/grub.d/20_linux_xen
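The rename works because grub-mkconfig runs the scripts in /etc/grub.d in sorted name order, so a lower numeric prefix puts the Xen entries ahead of the plain Linux ones. A quick way to convince yourself of the ordering (the helper name `first_script` is just for illustration):

```shell
# Print whichever of the given script names sorts first, which is the
# one that would be processed first.
first_script() {
    printf '%s\n' "$@" | LC_ALL=C sort | head -n 1
}

first_script 10_linux 20_linux_xen   # before the divert: 10_linux
first_script 10_linux 08_linux_xen   # after the divert:  08_linux_xen
```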

Set the default toolstack to xl:

$ sed -i '/TOOLSTACK/s/=.*/=xl/' /etc/default/xen

Edit the following parameter in /etc/default/grub to set the Dom0 memory allocation, limit Dom0 to a single vCPU and pin that vCPU to a physical core:

GRUB_CMDLINE_XEN_DEFAULT="dom0_mem=767M dom0_max_vcpus=1 dom0_vcpus_pin"

Update Grub and reboot:

$ update-grub

To confirm a successful installation, run xl list as root; a properly set up Dom0 should be reported in the output:

$ xl list

In order to provide network access to Guest domains it is necessary to configure the Dom0 networking appropriately. The most common configuration is to use a software bridge. For example, you can have xenbr0 for Internet, and xenbr1 configured for private network. The IP configuration of the bridge device should replace the IP configuration of the underlying interface, i.e. remove the IP settings from eth0 and move them to the bridge interface. eth0 will function purely as the physical uplink from the bridge so it shouldn’t have any IP settings on it. An example of single bridged network using eth0 configured with a static local IP address in /etc/network/interfaces:

iface eth0 inet manual

Alternatively, here’s an example of a single bridged network using eth0 configured with a local IP address via DHCP:

iface eth0 inet manual
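For reference, a complete DHCP-based bridge stanza could look roughly like what the helper below prints. This is a sketch under assumptions rather than my exact file: `print_bridge_config` is a made-up function, the defaults xenbr0 and eth0 follow the naming used above, and the bridge_ports option relies on the bridge-utils package being installed.

```shell
# Print a bridged /etc/network/interfaces stanza to stdout.
# print_bridge_config [BRIDGE] [UPLINK]
print_bridge_config() {
    bridge=${1:-xenbr0}
    uplink=${2:-eth0}
    cat <<EOF
auto lo
iface lo inet loopback

iface $uplink inet manual

auto $bridge
iface $bridge inet dhcp
    bridge_ports $uplink
EOF
}

# Review the output before copying it into /etc/network/interfaces.
print_bridge_config xenbr0 eth0
```

Note that the uplink carries no IP settings of its own; the address (here obtained via DHCP) lives on the bridge.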

To bring up your bridge, issue ifup xenbr0, or restart the networking service:

$ systemctl restart networking

Let’s create a DomU now. We’ll create a “hard disk” as a sparse file (in which the storage is allocated only when actually needed) that will grow up to 10G:

$ truncate -s 10G /disks/domU.img
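If you want to see the sparseness for yourself, compare the apparent size of such a file with the space actually allocated for it. The helper below is a small sketch that assumes GNU coreutils (truncate and stat -c); the name `sparse_report` is made up for this example.

```shell
# Create a sparse file and report its apparent size next to the space
# actually allocated on disk, both in bytes.
# sparse_report FILE SIZE
sparse_report() {
    file=$1; size=$2
    truncate -s "$size" "$file"
    apparent=$(stat -c %s "$file")    # logical file size
    # Allocated blocks multiplied by the size of one such block.
    allocated=$(( $(stat -c %b "$file") * $(stat -c %B "$file") ))
    echo "apparent=$apparent allocated=$allocated"
}

# Usage: sparse_report /tmp/sparse-test.img 10M
```

On a filesystem that supports sparse files, the allocated figure stays close to zero until data is actually written.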

Next, we will create a DomU configuration file at /etc/xen/domU.pv that will be used to create the virtual machine:

name = "My-DomU"

Let’s explain some of those:

- name: Unique DomU name

- memory: The amount of memory reserved for the DomU

- disk

- file: Installation media (sdb)

- file: Image file created for the domU (sda)

- vif: Defines a network controller. The 00:16:3e MAC block is reserved for Xen domains, so only the last three octets need to be filled in randomly
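One possible way to come up with such a MAC address is to take three random bytes from /dev/urandom and append them to the Xen prefix. The function name `xen_mac` is an illustrative choice, not an existing tool.

```shell
# Generate a MAC address in the 00:16:3e block with random low octets.
xen_mac() {
    # od prints three random bytes as hex pairs; tr and sed turn the
    # space-separated output into the form "aa:bb:cc".
    octets=$(od -An -N3 -tx1 /dev/urandom | tr -s ' ' ':' | sed 's/^://; s/:$//')
    echo "00:16:3e:$octets"
}

xen_mac   # prints something like 00:16:3e:5a:01:c7
```

Remember that the address still has to be unique on your local network segment, so check it against the other Guests on the same bridge.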

Download the Debian 8 image and mount it:

$ mkdir /iso

Then create a PV domain using:

$ xl create -c /etc/xen/domU.pv

This will start the Debian netinst installation via the DomU’s console, where you would configure the network using DHCP and partition the /dev/xvda virtual disk.

After you finish the installation, remove the kernel and initrd lines from /etc/xen/domU.pv, as well as the sdb disk entry, and add the bootloader line:

name = "My-DomU"

After this, create the domain again, and you should boot into the installed Guest domain directly.

- Systems Performance: Enterprise and the Cloud, by Brendan Gregg